Model Selection: Understanding AIC and BIC in Statistical Modeling

When faced with multiple statistical models, how do you determine which one is best? Model selection criteria like AIC and BIC give researchers and data scientists a principled way to compare candidates. These information criteria balance model complexity against goodness of fit, helping you avoid both overfitting and underfitting your data.

What Are Information Criteria in Model Selection?

Information criteria are numerical scores used to evaluate and compare statistical models fitted to the same data. They address a fundamental challenge in modeling: finding the balance between model complexity and goodness of fit. Two of the most widely used criteria are the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC).

What is the Akaike Information Criterion (AIC)?

AIC, developed by Japanese statistician Hirotugu Akaike in 1974, estimates the relative quality of statistical models for a given dataset. The formula for AIC is:

AIC = -2(log-likelihood) + 2k

Where:

- log-likelihood is the maximized log-likelihood of the fitted model, which measures how well the model fits the data

- k represents the number of parameters in the model

AIC rewards models that fit the data well (higher log-likelihood) but penalizes those with more parameters (higher k). This balancing act helps prevent overfitting, where models become too complex and capture noise rather than true patterns.
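As a minimal sketch of the formula above, the following Python snippet fits a normal distribution to a small sample by maximum likelihood and computes its AIC. The sample values and the function names (`gaussian_log_likelihood`, `aic`) are illustrative assumptions, not part of any particular library.

```python
import math


def gaussian_log_likelihood(data):
    """Maximized log-likelihood of an i.i.d. normal model fitted by MLE."""
    n = len(data)
    mean = sum(data) / n
    # The maximum-likelihood variance estimate divides by n, not n - 1.
    var = sum((x - mean) ** 2 for x in data) / n
    return -0.5 * n * (math.log(2 * math.pi * var) + 1)


def aic(log_likelihood, k):
    """AIC = -2(log-likelihood) + 2k."""
    return -2 * log_likelihood + 2 * k


# Hypothetical sample, made up for illustration only.
sample = [4.1, 5.0, 5.9, 4.7, 5.3, 6.2, 4.4, 5.6]
ll = gaussian_log_likelihood(sample)

# The normal model estimates two parameters: mean and variance.
print(f"log-likelihood = {ll:.3f}, AIC = {aic(ll, k=2):.3f}")
```

The AIC value on its own says little; it becomes useful once a second candidate model is scored on the same sample.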

What is the Bayesian Information Criterion (BIC)?

BIC, also known as the Schwarz criterion, was introduced by Gideon Schwarz in 1978. It’s similar to AIC but applies a stricter penalty for model complexity:

BIC = -2(log-likelihood) + k*ln(n)

Where:

- log-likelihood is the maximized log-likelihood of the fitted model, which measures how well the model fits the data

- k represents the number of parameters

- n is the sample size

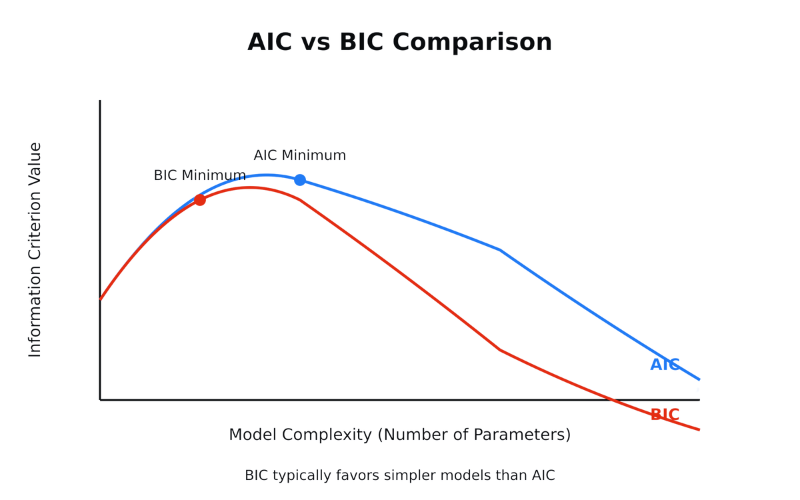

Since ln(n) exceeds 2 once n ≥ 8 (e² ≈ 7.39), BIC imposes a stronger per-parameter penalty than AIC for all but the smallest samples, and the gap widens as n grows. This makes BIC more conservative, often favoring simpler models.
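The crossover point can be checked directly. The sketch below compares the per-parameter penalty of the two criteria at a few sample sizes; the helper names and the chosen sample sizes are assumptions made for illustration.

```python
import math


def bic(log_likelihood, k, n):
    """BIC = -2(log-likelihood) + k*ln(n)."""
    return -2 * log_likelihood + k * math.log(n)


def penalty_per_parameter(n):
    """Per-parameter penalty of each criterion at sample size n."""
    return {"aic": 2.0, "bic": math.log(n)}


for n in (5, 7, 8, 100, 10_000):
    p = penalty_per_parameter(n)
    stricter = "BIC" if p["bic"] > p["aic"] else "AIC"
    print(f"n = {n:>6}: AIC penalty = {p['aic']:.2f}, "
          f"BIC penalty = {p['bic']:.2f} -> stricter: {stricter}")
```

AIC is the stricter criterion only for n of 7 or fewer; from n = 8 onward the BIC penalty is larger.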

Comparing AIC and BIC: Key Differences and Applications

Understanding when to use AIC versus BIC is crucial for effective model selection. Let’s examine their key differences and appropriate applications.

| Aspect | AIC | BIC |
|---|---|---|
| Penalty for complexity | 2k | k*ln(n) |
| Philosophical basis | Information theory | Bayesian |
| Sample size influence | Independent | Penalty increases with sample size |
| Model selection goal | Predictive accuracy | Finding “true” model |
| Typical preference | More complex models | Simpler models |
| Risk of | Overfitting | Underfitting |

When Should You Use AIC?

AIC is particularly useful when:

- Your primary goal is prediction

- You have a smaller sample size

- You’re more concerned about Type II errors (false negatives)

- You’re working with complex phenomena where the “true model” might be very complex

Dr. Kenneth Burnham, a renowned ecologist and statistician at Colorado State University, recommends AIC for ecological modeling where complex interactions are common. In his research with bird populations, AIC helped identify models that better captured the nuanced relationships between environmental factors and population dynamics.

When Should You Use BIC?

BIC is often preferred when:

- Your primary goal is finding the “true” model

- You have a larger sample size

- You’re more concerned about Type I errors (false positives)

- You’re working with phenomena that may be explained by simpler mechanisms

- You want to be more conservative against overfitting

The Bureau of Economic Analysis often employs BIC when building economic forecasting models, preferring its tendency to select simpler models that are more interpretable and often more stable over time.

Practical Implementation of AIC and BIC

Now that we understand the theoretical foundations, let’s look at how these criteria are practically applied in statistical analysis.

How to Calculate and Interpret AIC and BIC Values

When comparing models using AIC or BIC:

- Calculate the criterion value for each candidate model

- Select the model with the lowest value

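- As a rule of thumb, treat models within about 2 units of the minimum AIC as having substantial support; for BIC, a difference below 2 is weak evidence, and a difference above 6 is usually read as strong evidence against the higher-value model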

It’s important to note that the absolute values of AIC or BIC have no direct interpretation—it’s the relative differences between models that matter.

| AIC/BIC Difference | Interpretation |
|---|---|
| 0-2 | Substantial support for both models |
| 4-7 | Considerably less support for higher-value model |
| >10 | Essentially no support for higher-value model |
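The comparison procedure can be sketched in a few lines of Python: compute each model's difference from the lowest value, then map it onto the bands in the table. The model names and scores below are hypothetical, and differences that fall between the tabulated bands (2 to 4, 7 to 10) are reported as such rather than forced into a category.

```python
def deltas_from_best(scores):
    """Return each model's difference from the lowest criterion value."""
    best = min(scores.values())
    return {name: value - best for name, value in scores.items()}


def support_label(delta):
    """Map a difference onto the rule-of-thumb bands from the table."""
    if delta <= 2:
        return "substantial support"
    if 4 <= delta <= 7:
        return "considerably less support"
    if delta > 10:
        return "essentially no support"
    return "between the tabulated bands"


# Hypothetical AIC values for four candidate models.
aic_scores = {"A": 210.3, "B": 211.1, "C": 215.8, "D": 223.0}

ranked = sorted(deltas_from_best(aic_scores).items(), key=lambda item: item[1])
for name, delta in ranked:
    print(f"{name}: delta = {delta:.1f} -> {support_label(delta)}")
```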

Real-World Example: Linear Regression Models

Consider a dataset of housing prices with multiple potential predictor variables:

Model 1: Price ~ Size + Location

Model 2: Price ~ Size + Location + Age + Bathrooms

Model 3: Price ~ Size + Location + Age + Bathrooms + School_Rating + Crime_Rate

| Model | Parameters (k) | Log-Likelihood | AIC | BIC (n=500) |
|---|---|---|---|---|
| Model 1 | 3 | -1240 | 2486 | 2499 |
| Model 2 | 5 | -1220 | 2450 | 2471 |
| Model 3 | 7 | -1215 | 2444 | 2474 |

In this example, AIC would favor Model 3 (lowest AIC), suggesting that the additional variables provide meaningful improvements to the model’s fit. However, BIC would favor Model 2 (lowest BIC), suggesting that the two additional variables in Model 3 don’t justify the added complexity.
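The figures in this example can be reproduced from the parameter counts and log-likelihoods alone. The following sketch recomputes both criteria with n = 500 and reports which model each one prefers; the dictionary layout and variable names are illustrative choices.

```python
import math

# (number of parameters k, log-likelihood) taken from the example table.
models = {
    "Model 1": (3, -1240.0),
    "Model 2": (5, -1220.0),
    "Model 3": (7, -1215.0),
}
n = 500  # sample size used for the BIC column

results = {}
for name, (k, log_lik) in models.items():
    results[name] = {
        "aic": -2 * log_lik + 2 * k,
        "bic": -2 * log_lik + k * math.log(n),
    }
    print(f"{name}: AIC = {results[name]['aic']:.1f}, "
          f"BIC = {results[name]['bic']:.1f}")

best_aic = min(results, key=lambda m: results[m]["aic"])
best_bic = min(results, key=lambda m: results[m]["bic"])
print(f"AIC prefers {best_aic}; BIC prefers {best_bic}")
```

Note how small the BIC gap between Model 2 and Model 3 is compared with the gap to Model 1, which is why the difference, not just the ranking, is worth reporting.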

Harvard University’s Department of Statistics uses this type of comparison in their advanced regression courses to demonstrate how different criteria can lead to different model selections.

Advanced Considerations in Model Selection

Beyond the basics, several nuanced aspects of AIC and BIC deserve attention when conducting sophisticated analyses.

What Are the Limitations of AIC and BIC?

While powerful, these criteria have important limitations:

- They rely on the likelihood function, requiring proper model specification

- They can’t detect if all candidate models are poor

- They don’t directly measure predictive accuracy on new data

- They may not work well with very small sample sizes

Dr. Andrew Gelman of Columbia University cautions: “Information criteria are useful tools, but they shouldn’t be applied blindly. They’re just one component of thoughtful model evaluation.”

Model Averaging: Beyond Simply Selecting One Model

Rather than selecting a single “best” model, researchers increasingly use model averaging techniques that combine predictions from multiple models, weighted by their AIC or BIC scores. This approach acknowledges uncertainty in model selection and often produces more robust predictions.

The formula for AIC weights is:

w_i = exp(-0.5 × ΔAIC_i) / Σ_j exp(-0.5 × ΔAIC_j)

Where ΔAIC_i is the difference between the AIC of model i and the minimum AIC across all models.

| Model | AIC | ΔAIC | AIC Weight |
|---|---|---|---|
| Model 1 | 100 | 10 | <0.01 |
| Model 2 | 92 | 2 | 0.27 |
| Model 3 | 90 | 0 | 0.73 |

In this example, Model 3 has the highest weight (0.73), but Model 2 still contributes meaningfully to the averaged prediction (0.27 weight).
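A short sketch of the weight calculation, using the three AIC values from the table. The per-model predictions passed to the averaging step are hypothetical numbers chosen only to show how the weights are applied.

```python
import math


def akaike_weights(aic_values):
    """Convert AIC values into weights that sum to 1."""
    best = min(aic_values.values())
    raw = {name: math.exp(-0.5 * (value - best))
           for name, value in aic_values.items()}
    total = sum(raw.values())
    return {name: r / total for name, r in raw.items()}


def model_average(predictions, weights):
    """Weighted average of per-model predictions."""
    return sum(weights[name] * predictions[name] for name in predictions)


weights = akaike_weights({"Model 1": 100, "Model 2": 92, "Model 3": 90})
for name, w in weights.items():
    print(f"{name}: weight = {w:.3f}")

# Hypothetical point predictions from each model for one new observation.
predictions = {"Model 1": 310.0, "Model 2": 300.0, "Model 3": 295.0}
print(f"Model-averaged prediction: {model_average(predictions, weights):.2f}")
```

Subtracting the minimum AIC before exponentiating leaves the weights unchanged but avoids numerical underflow when the raw AIC values are large.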

AIC and BIC in Different Statistical Frameworks

These criteria extend beyond basic linear models to various statistical frameworks:

- Time Series Analysis: AIC helps determine optimal lag structures in ARIMA models

- Mixed Effects Models: Both criteria aid in selecting random effects structures

- Machine Learning: Modified versions guide hyperparameter tuning in regularized regression

The National Center for Atmospheric Research employs these criteria extensively in climate modeling, where complex temporal dynamics require sophisticated model selection approaches.

Frequently Asked Questions

What does a lower AIC or BIC value indicate?

A lower AIC or BIC value indicates a better model, offering an improved balance between fit and complexity. When comparing models, you should generally select the one with the lowest criterion value.

Can AIC and BIC be compared directly?

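No. An AIC value should not be compared with a BIC value, because the two criteria apply different penalties. Within a single criterion, values should only be compared among models fitted to the exact same dataset and the same response variable; they are not comparable across different datasets.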

Do AIC and BIC always select the same model?

No, AIC and BIC often select different models, especially with larger sample sizes. BIC applies a stronger penalty for complexity and typically favors simpler models than AIC does.

What sample size is required for reliable AIC and BIC calculations?

While there’s no strict minimum, results become more reliable with larger samples. As a rule of thumb, aim for at least 10 observations per parameter estimated in your model for reasonably reliable criterion values.

Can information criteria be used for non-nested models?

Yes, unlike likelihood ratio tests, AIC and BIC can compare non-nested models (models that aren’t subsets of each other), making them extremely versatile for model selection across different model structures.
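To illustrate, here is a minimal sketch that compares two non-nested distributions, an exponential and a log-normal, fitted to the same positive-valued sample using their closed-form maximum-likelihood estimates. The data and function names are made up for illustration. Both likelihoods are evaluated on the same raw observations, which is what makes the AIC values comparable.

```python
import math


def exponential_log_likelihood(data):
    """Maximized log-likelihood of an exponential model (1 parameter)."""
    n = len(data)
    rate = n / sum(data)  # MLE of the rate is 1 / sample mean
    return n * math.log(rate) - rate * sum(data)


def lognormal_log_likelihood(data):
    """Maximized log-likelihood of a log-normal model (2 parameters)."""
    n = len(data)
    logs = [math.log(x) for x in data]
    mu = sum(logs) / n
    var = sum((v - mu) ** 2 for v in logs) / n  # MLE divides by n
    return -sum(logs) - 0.5 * n * (math.log(2 * math.pi * var) + 1)


def aic(log_likelihood, k):
    return -2 * log_likelihood + 2 * k


# Hypothetical waiting times, made up for illustration only.
waiting_times = [0.8, 1.9, 2.4, 3.1, 3.3, 4.0, 5.2, 7.5]

candidates = {
    "exponential": (exponential_log_likelihood(waiting_times), 1),
    "log-normal": (lognormal_log_likelihood(waiting_times), 2),
}
for name, (log_lik, k) in candidates.items():
    print(f"{name}: log-likelihood = {log_lik:.3f}, AIC = {aic(log_lik, k):.3f}")
```

A likelihood ratio test would not apply here, because neither distribution is a special case of the other.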

How do AIC and BIC relate to cross-validation?

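AIC and cross-validation both aim to estimate how well a model will predict new data, but through different mechanisms. Cross-validation measures performance directly on held-out data, while AIC relies on a theoretical approximation computed from the training data, so the two tend to agree in large samples. BIC has a different target: it approximates the Bayesian evidence (marginal likelihood) for each model, which is why it is associated with identifying the “true” model rather than with prediction.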